TO AN END USER, ONLY TWO THINGS MATTER IN AN APPLICATION: functionality and performance. Functionality has made huge strides in recent years. There's very little that can't be accomplished with today's technology, and more functionality is added to business applications every day. Performance, on the other hand, continues to be a challenge. The vast amounts of data being processed can quickly swamp a customer's system and make it inefficient, slow and redundant. How can this situation be improved?

There are seven key areas to look at if we are to design high-performance applications:

- The appropriate I/O for a specific task

- Minimizing all initiation and termination

- Designing applications to be 'interactive' (from the perspective of the user)

- Pre-summarizing and formatting data for easy access

- Thin client applications are preferred - 'fat client' is not a feature

- The developers' environment vs. the end user's environment

- Be an end user for a day!

Appropriate I/O for a Specific Task

It's absolutely vital that we use the appropriate I/O for a specific task. In fact, in 9 out of 10 cases that I've seen, the performance issues were caused, at least in part, by inappropriate I/O. If the user is experiencing bad response time, high CPU use, memory faulting, long batch jobs, or high disk actuator arm activity, look to the application. If a million I/Os are occurring to select 10 records, the result is obviously going to be bad response time, high CPU use, memory faulting, long batch jobs, or high disk actuator arm activity.

If you can trim that I/O down to only what is necessary to get those 10 records, you will eliminate those performance issues. Why create more work than is necessary to achieve the desired result?

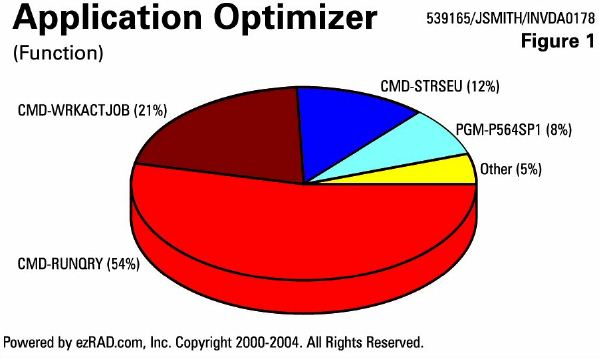

This is an example of an inefficient system, with far too much unnecessary I/O dedicated to running queries instead of doing actual work. In this example, 54 percent of the resources went to running a query and 21 percent to the WRKACTJOB command. Only 8 percent of the resources consumed by this user were actually spent on order processing (PGM-P564SP1).

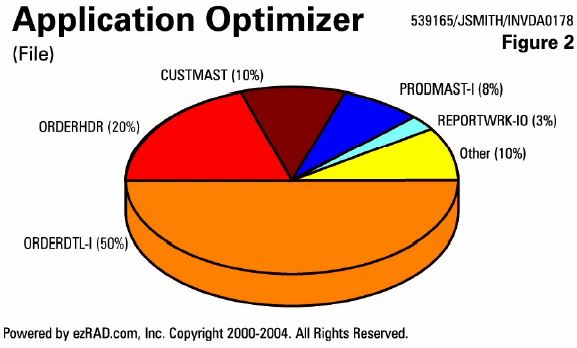

Here's another example. In this illustration, the order detail file accounts for half of the I/O, while a report work file accounts for only 3 percent. In all likelihood, the order detail file is being read from top to bottom to check whether each order is in the right status for printing on the report. There could be 7 years' worth of history in this file, which is not only being read from top to bottom but is also chaining out to the order header, customer master and product master. Then almost all of those records are discarded because they don't match the criteria.

RPG/COBOL

When designing applications in RPG and COBOL, it's very important to use logical views. An efficient, well-performing application doesn't read 100 records to select one, but uses a logical view, keyed in the correct sequence. A file can have 10 million records in it, but if you use the correct logical view, you can go to a specific position and read only the 10 records you need to present on-screen.
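
As a rough illustration of why the keyed view matters, the Java sketch below uses a `TreeMap` as a stand-in for a logical view keyed by order number and counts how many records each approach touches. The `OrderLine` record, the `recordsRead` counter and the key layout are hypothetical; on IBM i the real mechanism would be a keyed logical file read with SETLL/READE in RPG or START/READ NEXT in COBOL.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical order detail record; the key is the order number.
record OrderLine(int orderNo, String item) {}

class KeyedViewDemo {
    // Stand-in for the physical file in arrival sequence.
    final List<OrderLine> physicalFile = new ArrayList<>();
    // Stand-in for a logical view keyed by order number.
    final TreeMap<Integer, List<OrderLine>> byOrderNo = new TreeMap<>();
    long recordsRead = 0;

    void add(OrderLine line) {
        physicalFile.add(line);
        byOrderNo.computeIfAbsent(line.orderNo(), k -> new ArrayList<>()).add(line);
    }

    // Full scan: every record is read, and almost all are discarded.
    List<OrderLine> scan(int orderNo) {
        List<OrderLine> result = new ArrayList<>();
        for (OrderLine line : physicalFile) {
            recordsRead++;
            if (line.orderNo() == orderNo) result.add(line);
        }
        return result;
    }

    // Keyed access: position to the key and read only the matching records.
    List<OrderLine> keyed(int orderNo) {
        List<OrderLine> result = new ArrayList<>(byOrderNo.getOrDefault(orderNo, List.of()));
        recordsRead += result.size();
        return result;
    }
}
```

With 1,000 orders of 3 lines each, `scan(7)` reads all 3,000 records to return 3, while `keyed(7)` reads just the 3 it returns.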

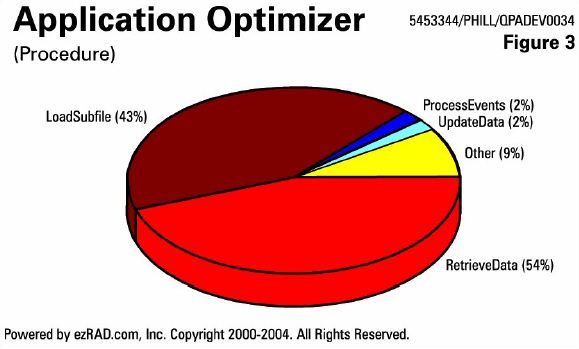

The 'page at a time' concept is important in green screen programming. Use that logical view to get the next screen's worth of information when the user pages up or down. Preloading 1000 records into a subfile and letting the user page up and down through it may be an easier programming technique, but it creates a big performance issue. Using 'page at a time' is far more efficient from a performance standpoint. Here is an example of an application that is not doing 'page at a time' subfile processing. You can see the resulting load on the system from retrieving and loading more data than is necessary.
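
The page-down half of 'page at a time' can be sketched in Java as keyset paging: remember the last key shown and, when the user pages down, read forward from just after it for one screen's worth of entries. The `PageReader` class, the page size and the customer-name keys are assumptions for illustration; the RPG equivalent would reposition the logical file (for example with SETGT on the last key) and load one subfile page.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

class PageReader {
    private final NavigableMap<String, String> view; // keyed view: customer name -> account (hypothetical)
    private final int pageSize;
    private String lastKeyShown = null; // null means "start of file"

    PageReader(NavigableMap<String, String> view, int pageSize) {
        this.view = view;
        this.pageSize = pageSize;
    }

    // Reads only the next pageSize keys after the last key shown, never the whole file.
    List<String> pageDown() {
        NavigableMap<String, String> rest =
            lastKeyShown == null ? view : view.tailMap(lastKeyShown, false);
        List<String> page = new ArrayList<>(pageSize);
        for (String key : rest.keySet()) {
            if (page.size() == pageSize) break;
            page.add(key);
        }
        if (!page.isEmpty()) lastKeyShown = page.get(page.size() - 1);
        return page;
    }
}
```

Page up would work the same way in reverse, reading backward from the first key on the current screen.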

Java/SQL

The same performance issues arise in Java and SQL, where they show up as table scans and re-indexing; both create unnecessary load on a system and are extremely inefficient. For example, the fields in the WHERE clause of an SQL statement are extremely important: they need to match a permanent, existing index or logical file. Suppose you select all fields from the order detail file where the order number is equal to 7 (an overly simple example, but sufficient for demonstration purposes). If there isn't an index or a logical file over the order detail file keyed by order number, performance goes out the window. The operating system will either do a full table scan, checking every record to find the line items you requested, or re-index the file into a temporary library, with several users simultaneously re-indexing the same file into their QTEMPs. Table scans and re-indexing should be avoided whenever possible.
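
A hedged JDBC sketch of the order-detail example follows. The table `ORDDTL`, the columns `ORDNO` and `ITEMNO`, and the index name `ORDDTL_ORDNO_IX` are hypothetical. The point is that the WHERE column is covered by a permanent index created once (by an install script or DBA), not per run, so the optimizer has a keyed path instead of a table scan or a temporary index. The parameter marker also lets the statement be prepared and reused rather than rebuilt for each order number.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

class OrderDetailDao {
    // Run once at install time, not per request (hypothetical names).
    static final String CREATE_INDEX =
        "CREATE INDEX ORDDTL_ORDNO_IX ON ORDDTL (ORDNO)";

    // The WHERE column (ORDNO) matches the key of the permanent index above.
    static final String SELECT_BY_ORDER =
        "SELECT * FROM ORDDTL WHERE ORDNO = ?";

    static void createIndex(Connection con) throws SQLException {
        try (Statement st = con.createStatement()) {
            st.executeUpdate(CREATE_INDEX);
        }
    }

    // Returns the item numbers on one order; only that order's rows should be read.
    static List<String> itemsFor(Connection con, int orderNo) throws SQLException {
        List<String> items = new ArrayList<>();
        try (PreparedStatement ps = con.prepareStatement(SELECT_BY_ORDER)) {
            ps.setInt(1, orderNo);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) items.add(rs.getString("ITEMNO"));
            }
        }
        return items;
    }
}
```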

Wireless

On a wireless network, it's important to keep all activity to the bare minimum needed to get the job done. You need server-side code that filters out all of the records that aren't needed and sends only what a particular application requires. In fact, many wireless applications won't work at all unless they are extremely thin clients. Palm devices, for example, have a 64K limit per application or shared library. Applications that limited in size are tough to write, but if they're going to perform, they must be as small as you can make them, to ease the load on the wireless network.

Client/Server

In client/server, it's important to keep the logic near the database. Don't pull large amounts of data through to the client and make the client filter through a whole order file or customer history. Build a good server component that does the filtering near the database and sends the client only the bare minimum of data needed for presentation.
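
A minimal sketch of such a server component: it filters next to the data and projects each match down to the few fields the client screen shows, capped at a row limit. The `Order` and `OrderSummary` records, the `"OPEN"` status code and the in-memory list are assumptions; the list merely stands in for the database, and in practice the filter would be pushed into an indexed SQL query or keyed read on the server.

```java
import java.util.List;

// Hypothetical full order record as stored on the server.
record Order(int orderNo, String customerId, String status, String notes, double total) {}

// Only the fields the client actually displays.
record OrderSummary(int orderNo, double total) {}

class OrderService {
    private final List<Order> orders; // stand-in for the order file on the server

    OrderService(List<Order> orders) { this.orders = orders; }

    // Filters on the server and sends back small summaries, at most maxRows of them,
    // so only the data needed for presentation crosses the network.
    List<OrderSummary> openOrdersFor(String customerId, int maxRows) {
        return orders.stream()
            .filter(o -> o.customerId().equals(customerId))
            .filter(o -> o.status().equals("OPEN"))
            .limit(maxRows)
            .map(o -> new OrderSummary(o.orderNo(), o.total()))
            .toList();
    }
}
```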

Minimizing Initiation and Termination

RPG/COBOL

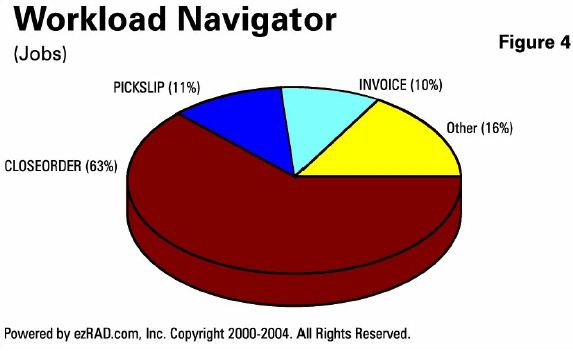

For years, you've been told that batch jobs are good and interactive is bad. The people who have preached this are usually selling hardware, but the mentality has crept into software development. For a software developer, batch jobs can be a big problem from a performance standpoint. If you have a batch job under a single job name (CLOSEORDER, for example) that runs 300,000 times a week, the overhead associated with that application is staggering. There are 300,000 job starts and stops occurring, along with all the associated opening and closing of files and loading of programs into and out of memory. In the illustration (Fig. 4, Workload Navigator (Jobs)), the CLOSEORDER function is responsible for a whopping 63 percent of the jobs on the system.

Instead of starting and ending this job hundreds of thousands of times a week, make CLOSEORDER a batch job that sits in the background and stays active all week long. The programs will stay in memory all week, and the files will stay open. The only thing that will be moving is a record pointer, positioned to whatever order number the job receives from a data queue. You've completely eliminated the initiation and termination overhead.
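
The same pattern can be sketched in Java with a `BlockingQueue` standing in for the data queue: one long-running worker does its expensive setup once and then simply waits for order numbers. The `CloseOrderServer` class, its `openFiles()` step and the `-1` shutdown sentinel are illustrative assumptions, not an IBM i API; on the AS/400 itself the worker would typically be an RPG or COBOL program waiting on the data queue through the QRCVDTAQ API.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class CloseOrderServer implements Runnable {
    static final int SHUTDOWN = -1; // hypothetical sentinel that tells the worker to end

    final BlockingQueue<Integer> dataQueue = new LinkedBlockingQueue<>();
    final List<Integer> closedOrders = Collections.synchronizedList(new ArrayList<>());
    volatile int initializations = 0;

    // Stand-in for the expensive setup (opening files, loading programs), done once.
    private void openFiles() { initializations++; }

    @Override
    public void run() {
        openFiles();
        try {
            while (true) {
                int orderNo = dataQueue.take(); // blocks until a request arrives
                if (orderNo == SHUTDOWN) break;
                closedOrders.add(orderNo);      // stand-in for positioning to the order and closing it
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

However many order numbers arrive, the setup runs once per worker start rather than once per order.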

We've seen jobs that run for three hours because of an inefficient trigger. If you attach trigger programs to your files, this is the most important thing to remember: don't do anything in the trigger program other than trigger. Don't run an SQL statement inside a trigger program. Don't call out to those traditional routines inside a trigger program. Don't open any files. Simply retrieve the before and after images that are passed to the trigger program, do a little parsing of that data if you need to, write it to a data queue with a single line of code, and return. Then have a background job do the work.
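
In Java terms, the "do nothing but trigger" rule might look like the handler below: it wraps the before and after images in a small message, puts it on a queue and returns, leaving the real work to a separate background job. The `OrderTrigger` class, the `ChangeEvent` record and the table name `ORDHDR` are hypothetical; an actual IBM i trigger program receives a trigger buffer from the database and would typically send to a data queue through the QSNDDTAQ API.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical message carrying the record images the trigger received.
record ChangeEvent(String table, String beforeImage, String afterImage) {}

class OrderTrigger {
    private final BlockingQueue<ChangeEvent> queue;

    OrderTrigger(BlockingQueue<ChangeEvent> queue) { this.queue = queue; }

    // Called for every insert/update/delete. No SQL, no file opens, no calls to
    // other business routines: enqueue the images and return immediately.
    void fire(String beforeImage, String afterImage) {
        if (!queue.offer(new ChangeEvent("ORDHDR", beforeImage, afterImage))) {
            throw new IllegalStateException("change queue is full");
        }
    }
}
```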

If your application uses interactive attention key programs, the same issues apply. Oftentimes you will see attention key programs initiating and terminating a separate job. Every time someone enters an order or looks up something on a list, they start and stop a new job, open and close files, and load programs in and out of memory. There is a much underutilized command called TFRCTL (transfer control). If you place TFRCTL at the end of that attention key program, you will immediately improve the performance of one of the most expensive resources on your system. This can dramatically reduce interactive load.

Java/Wireless

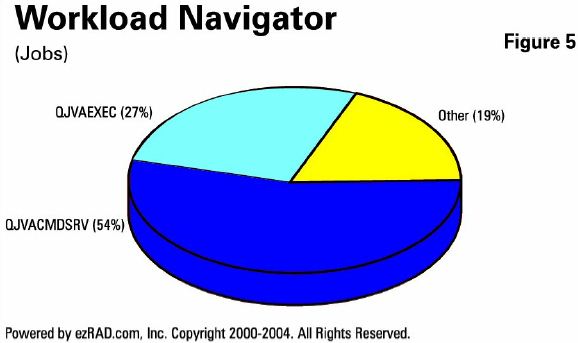

To reduce performance overhead in Java applications, use the same reasoning. Performance will be greatly enhanced if you minimize the use of QJVACMDSRV and QJVAEXEC. Instead of running 50,000 Java command servers per day, use a TCP/IP multi-threaded socket server with good socket clients that open connections, and leave them open all day long. The socket works like the data queue in the previous example, leaving files open and programs in memory so that the user can quickly access the information that's needed. These sockets can also be used with Java execute commands or when calling out to traditional RPG/COBOL business logic routines.
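
A minimal sketch of a multi-threaded socket server in plain Java: each client connection is handed to a pooled thread and stays open for as many request lines as the client sends, so there is no per-request job start. The line-based protocol, the `lookup` method (standing in for a call to RPG/COBOL business logic) and the pool size of 8 are assumptions for illustration; a production server would also need authentication, timeouts and recovery logic.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class LookupSocketServer implements AutoCloseable {
    private final ServerSocket serverSocket;
    // One thread accepts; the others serve connections (hypothetical size).
    private final ExecutorService pool = Executors.newFixedThreadPool(8);

    LookupSocketServer(int port) throws IOException {
        serverSocket = new ServerSocket(port); // port 0 = any free port
        pool.submit(this::acceptLoop);
    }

    int port() { return serverSocket.getLocalPort(); }

    private void acceptLoop() {
        try {
            while (!serverSocket.isClosed()) {
                Socket client = serverSocket.accept();
                pool.submit(() -> serve(client));
            }
        } catch (IOException e) {
            // accept() fails once the server socket is closed; the loop ends
        }
    }

    // One connection, many requests: answer lines until the client disconnects.
    private void serve(Socket client) {
        try (client;
             BufferedReader in = new BufferedReader(
                 new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(client.getOutputStream(), true, StandardCharsets.UTF_8)) {
            String request;
            while ((request = in.readLine()) != null) {
                out.println(lookup(request));
            }
        } catch (IOException e) {
            // connection dropped; the client is expected to reconnect
        }
    }

    // Hypothetical business logic; anything it opens can stay open between requests.
    private String lookup(String key) { return "VALUE:" + key; }

    @Override
    public void close() throws IOException {
        serverSocket.close();
        pool.shutdownNow();
    }
}
```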

In the illustration (Fig. 5, Workload Navigator (Jobs)), the QJVACMDSRV and QJVAEXEC jobs are using 81 percent of the CPU and I/O on the system. If you implement the kinds of changes discussed here, that number could drop to less than one percent.

Incidentally, you need to have good recovery logic, as well. Connections will eventually go down. And while it's easier to start and stop a connection rather than have to deal with the recovery of a suspended connection, it's not the best thing to do from a performance standpoint.
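
One way to combine long-lived connections with recovery logic is a small wrapper that keeps a single connection open and, only when a request fails, discards it, reconnects and retries once. The `Conn` interface, the `ReconnectingClient` class and the single-retry policy are assumptions for this sketch; real code would distinguish network failures from application errors and would probably wait between attempts.

```java
import java.io.IOException;
import java.util.function.Supplier;

// Hypothetical connection abstraction (socket, JDBC, ODBC, ...).
interface Conn extends AutoCloseable {
    String send(String request) throws IOException;
    @Override void close();
}

class ReconnectingClient {
    private final Supplier<Conn> connector;
    private Conn conn; // opened on first use, then kept open
    int connects = 0;

    ReconnectingClient(Supplier<Conn> connector) { this.connector = connector; }

    private Conn connection() {
        if (conn == null) {
            conn = connector.get();
            connects++;
        }
        return conn;
    }

    String send(String request) throws IOException {
        try {
            return connection().send(request);
        } catch (IOException first) {
            // The connection went down: discard it, reconnect once and retry.
            conn.close();
            conn = null;
            return connection().send(request);
        }
    }
}
```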

For wireless applications, the multi-threaded socket client/server applications are the answer as well. They are going to perform well wirelessly, because they minimize what's going across the wireless network.

SQL

It's not uncommon to see 60,000 SQL requests per minute over a file that has 300 records in it. This is an example of a look-up table being hammered. Instead, why not load the table into memory once and access it out of an array? If you pull sets of data and deal with them locally, in memory, you can eliminate those 60,000 SQL statements a minute.
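
A minimal Java sketch of the idea: read the small look-up table once, keep it in a map, and answer every lookup from memory. The `LookupCache` class and its `loader` supplier (which in real code would run a single SQL SELECT over the table) are hypothetical; if the table can change during the day, a refresh rule is also needed.

```java
import java.util.Map;
import java.util.function.Supplier;

class LookupCache {
    private final Supplier<Map<String, String>> loader; // e.g. one SELECT over the whole table
    private Map<String, String> table;                  // loaded once, then read from memory
    int loads = 0;

    LookupCache(Supplier<Map<String, String>> loader) { this.loader = loader; }

    synchronized String describe(String code) {
        if (table == null) {
            table = Map.copyOf(loader.get());
            loads++;
        }
        return table.getOrDefault(code, "UNKNOWN");
    }
}
```

Sixty thousand calls to `describe` then cost one read of the table instead of sixty thousand SQL requests.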

Client/Server

Keep ODBC connections open. It's harder to program an application that way, but it will be worthwhile when your code moves into production, and suddenly there are 10 million records in the file and 300 users constantly establishing and disconnecting ODBC connections. Start the connections, and leave them open all day long.
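
In Java, the usual way to keep database connections open is a connection pool: connections are opened once, then lent out and handed back instead of being connected and disconnected per request. The tiny generic `SimplePool` below is only a sketch with a hypothetical `opener`; production code would normally rely on an established JDBC pooling library or the application server's pool, which also validates stale connections.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Supplier;

class SimplePool<C> {
    private final BlockingQueue<C> idle;

    // Opens all connections up front; they stay open for the life of the pool.
    SimplePool(int size, Supplier<C> opener) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) idle.add(opener.get());
    }

    // Borrows an open connection, waiting if all of them are in use.
    C borrow() throws InterruptedException { return idle.take(); }

    // Hands the connection back to the pool instead of closing it.
    void giveBack(C connection) { idle.add(connection); }
}
```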

New Development

When you're doing new development, it's important to look at existing applications and learn from both the good things and the bad things. Find out what you're doing right with your existing workload and applications, and repeat that when you're building new ones. Reuse whatever existing date routines and business logic routines you can, but optimize the calls to those routines. It isn't uncommon to see an old date routine that has been used for 15 years suddenly causing major performance problems. The reason is that the routine is being called 2,000 times a minute, interactively, and it sets on LR each time. We've seen 8-second response times drop to sub-second by changing that old date routine to return without setting on LR. We've seen 12-hour batch jobs drop to 20 minutes by doing the same thing.
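
Java has no LR indicator, but the same mistake appears when a helper rebuilds its working state on every call. The hypothetical `DateRoutine` below contrasts the two shapes, using a `yyyyMMdd` input format as an assumption: one method builds its `DateTimeFormatter` on each call, the other builds it once (the class is immutable and thread-safe) and reuses it, much like an RPG routine that returns without setting on LR keeps its files open and its storage initialized. The per-call cost here is far smaller than closing and reopening files, but the fix has the same form.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

class DateRoutine {
    // Like a routine that sets on LR: rebuilds its working state on every call.
    static String toIsoRebuildingEachCall(String yyyymmdd) {
        DateTimeFormatter in = DateTimeFormatter.ofPattern("yyyyMMdd");
        return LocalDate.parse(yyyymmdd, in).toString();
    }

    // Like a routine that returns without LR: state is built once and reused.
    private static final DateTimeFormatter IN = DateTimeFormatter.ofPattern("yyyyMMdd");

    static String toIso(String yyyymmdd) {
        return LocalDate.parse(yyyymmdd, IN).toString();
    }
}
```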

Put your new development through a phase of detailed analysis to make sure it's optimized before it gets into production. None of us is perfect; we're going to make mistakes and write things that don't perform optimally in production. Do the best you can, then move the new code into a test and integration environment and run a detailed analysis there, so you catch problems before they move to production.

Designing Everything to be Interactive

This is a good goal to set - goals should be set high. Designing every aspect of an application to be interactive is probably an unrealistic goal, but it's definitely the direction you should head.

It's important to clarify that by 'interactive' I don't mean green-screen interactive, I mean functionality interactive - make it appear to the user that they are using an interactive application. Don't submit a huge background process to get data to present to a user. Again, only read that data which is needed for the request.

RPG/COBOL

Avoid the batch-job shortcut. Design your app to be interactive. The exception is an application that needs to get built quickly, or that runs at night and can access tons of horsepower; in that case, go ahead and write a batch routine; submit a background SQL that reads the billions of records to select a thousand. If, on the other hand, you're designing applications for performance, design them to be interactive.

Java and Client/Server

'Page at a time' is not the terminology of Java or of most client/server applications. There are drop-down list boxes, which hold pre-loaded data. You can't do page at a time with a drop-down list box, so design it differently: make it mimic page-at-a-time subfiles. Allow the user to submit a name or part of a name to the server, and let the server find the subset that meets the filter criteria the user typed in and put only that subset in the drop-down list box.
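
A hedged sketch of the server side of such a filtered drop-down: the client sends what the user typed, and the server returns at most one "page" of matching names from a sorted set, much like positioning a keyed logical view and reading one subfile page. The `CustomerNameSearch` class, the case-sensitive prefix match and the limit are assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableSet;
import java.util.TreeSet;

class CustomerNameSearch {
    private final NavigableSet<String> names; // kept sorted, like a keyed logical view

    CustomerNameSearch(NavigableSet<String> names) { this.names = names; }

    // Positions to the typed prefix and reads forward only while names still match,
    // stopping after limit entries.
    List<String> suggest(String prefix, int limit) {
        List<String> result = new ArrayList<>(limit);
        for (String name : names.tailSet(prefix, true)) {
            if (!name.startsWith(prefix) || result.size() == limit) break;
            result.add(name);
        }
        return result;
    }
}
```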

In client/server applications, graphical interfaces look great, but sometimes we need a reality check: heavy GUI elements cause performance issues. There's nothing wrong with putting some images in an application to make it look good, but focus on functionality and you will have a much better performing application.

SQL

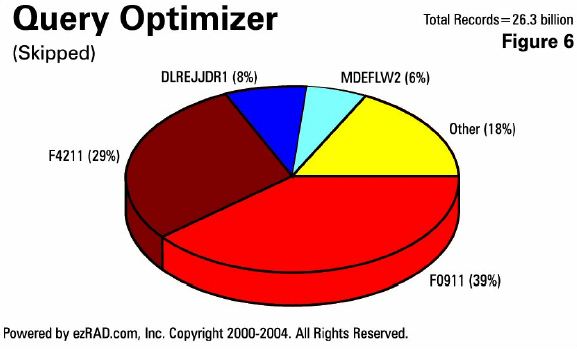

Plain and simple: if you need 10 records, make sure that the operating system is reading only 10 records. Just because you got 10 back doesn't mean that's all that was read. Typically, there is a lot of unnecessary background work going on if the correct indexes don't exist (see Fig. 6, Query Optimizer (Skipped records in SQL)).

Here's a system that had 26 billion skipped records in a 24-hour period. None of these records needed to be read, since they weren't selected by the queries.

Note that 82 percent of all that unnecessary I/O is related to only four files. Avoid this mistake when writing new applications.

Wireless

Part of making wireless applications seem real-time and interactive is making sure that you're not repeatedly pulling down the same data. Store the data and the look-up tables offline. If there's a terms code lookup table that changes once a century, store it offline on the wireless device, and have a good method for knowing when it changes and have the offline copy updated. Updating it once a day/week/month is fine - not every time they go into the screen.
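
One simple way to "know when it changes" is a version number: the device keeps its offline copy together with the version it was built from, and on a periodic check asks the server only for the current version, downloading the full table only when that number has moved. The `OfflineTable` class and its two supplier functions are hypothetical stand-ins for whatever synchronization calls the wireless application actually makes.

```java
import java.util.Map;
import java.util.function.IntSupplier;
import java.util.function.Supplier;

class OfflineTable {
    private final IntSupplier serverVersion;              // cheap call: current table version
    private final Supplier<Map<String, String>> download; // expensive call: the whole table
    private Map<String, String> localCopy = Map.of();
    private int localVersion = -1;
    int downloads = 0;

    OfflineTable(IntSupplier serverVersion, Supplier<Map<String, String>> download) {
        this.serverVersion = serverVersion;
        this.download = download;
    }

    // Called on a schedule (daily, weekly, ...), not every time the screen opens.
    void sync() {
        int current = serverVersion.getAsInt();
        if (current != localVersion) {
            localCopy = Map.copyOf(download.get());
            localVersion = current;
            downloads++;
        }
    }

    // Screen lookups are always answered from the device's local copy.
    String lookup(String code) { return localCopy.get(code); }
}
```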

Pre-Summarize and Format Data

Suppose you have a web application that displays monthly sales totals. If you run the summarizing SQL on the fly every time the page is requested, it's going to perform horribly. Regardless of the type of application, pre-summarizing data works to your advantage.

Typically, traditional applications keep data highly normalized. There is a customer master with one record per account, and an order header file with one record per order number. That isn't necessarily the right approach for new development, especially web, wireless, or client/server applications. While RPG and COBOL programmers usually avoid de-normalizing data in traditional applications, it might be the right thing to do for analytical applications. Of course, there are other issues to consider besides performance, such as data integrity; de-normalizing data should be done with care, but it does have performance advantages.

In instances where providing real-time data isn't feasible, it can be effectively mimicked. If the data is pre-summarized as the database changes, the information can be presented instantly to the user. It isn't a real-time total, but it's as good as real-time for most uses.
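
A minimal sketch of pre-summarizing as the data changes: each time an order is posted, a running total for its month is updated, so a monthly sales page reads one summary value instead of scanning order history. The `MonthlySalesSummary` class is hypothetical; on IBM i the update would more likely be made to a summary file, for example by a background job fed from a data queue. Amounts use `BigDecimal` rather than floating point.

```java
import java.math.BigDecimal;
import java.time.LocalDate;
import java.time.YearMonth;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class MonthlySalesSummary {
    private final Map<YearMonth, BigDecimal> totals = new ConcurrentHashMap<>();

    // Called whenever an order is posted; adds the amount to that month's running total.
    void onOrderPosted(LocalDate orderDate, BigDecimal amount) {
        totals.merge(YearMonth.from(orderDate), amount, BigDecimal::add);
    }

    // What the web page reads: a single lookup, no scan of order history.
    BigDecimal totalFor(YearMonth month) {
        return totals.getOrDefault(month, BigDecimal.ZERO);
    }
}
```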

Thin/Fat Clients

It's been said before, but it bears repeating: fat client is not a feature. In fact, with wireless applications you must have the thinnest client possible, or your programs won't even run, much less perform well. For other types of applications, it's no less important from a performance standpoint. Limit ILE program size: use the optimization capability and remove debug data. Also, modularize your code. It's better to write several small programs that can be dynamically loaded as needed than to load monster 20 MB programs into memory and then terminate them. Smaller programs also pay off in reusability and flexibility.

Developers' Environment vs. End Users' Environment

This is an unpopular topic for most developers, but it needs to be addressed. What's wrong with this picture? We developers have the biggest, fastest, most expensive PCs made. Since it's a pain in the neck to key data into an order file, we create only about 10 records for testing purposes. That SQL statement or RPG program will run quickly over 10 records, of course. But how will it perform over 10 million records? Meanwhile, the end users have old equipment and gigantic sets of data.

Can you imagine if you switched this scenario around? As you were developing the code, you would 'feel the pain,' so to speak. You would be able to immediately see how well or how poorly your application performs under real-world circumstances. If your screen is taking a half-hour to come back, you're not going to move that application to the next level of development.

Be a user for a day

Experience what it is like to run your application on a 4-year-old PC. How long does it take you to enter a transaction? How do the features and functionality really work? Get firsthand feedback on performance and functionality concerns, and gain an appreciation for end-user complaints. Remember, your first ideas aren't always the best ones. Go ahead and implement your first ideas, but be open to coming back and making improvements, both from a features/functionality standpoint and from a performance standpoint.

Conclusion

For years, we have been told that the solution to poorly performing applications was to run them on newer, faster, bigger hardware. That's just not always the case. By making a few basic changes in the way we design our applications, we can achieve top performance for the customer without costing them hundreds of thousands of dollars of unnecessary hardware upgrades.

How well are your applications running? To receive a free software performance evaluation from MB Software, register at www.mb-software.com. After a quick download and installation of software for your AS/400 or iSeries, you can collect a full business day's worth of your company's performance data for our analysis. We will then have a conference call with you to discuss our findings and how you can gain performance improvements with your existing applications.