Many iSeries and System i servers have excess CPU capacity yet experience system and application performance issues that directly affect their end users. You may have long-running batch jobs, poorly performing SQL requests, slow browser-based and client/server applications, or unacceptable interactive response times. There may also be significant disk arm utilization and memory faulting occurring on the system.

Adding CPW and DASD through hardware upgrades often does not significantly improve things. 90% of all performance issues are caused by I/O. Focus on improving the efficiency of application I/O first, and you can dramatically enhance performance and capacity before considering additional hardware upgrades.

Analyze and optimize various types of I/O:

- SQL and query issues from lack of proper indexing

- Journal overhead associated with global security and transaction auditing of temporary objects

- Unnecessary disk activity from generation of unused spooled file reports and excessive joblogs

- Inefficient RPG or COBOL data access methods

- Constant thrashing of resources from excessive job and/or SQL initiation and termination

Don't adjust memory settings. Don't allocate more CPU resources to your Production LPAR. Don't buy more DASD. Don't upgrade the model of your server, at least not yet. Optimize the performance of your application first.

It takes a lot of hardware to make 1 million I/Os perform as well as 1,000 I/Os. Memory is consumed, and CPU and disk activity run closer to capacity, just to process through your large database files. If you tune the application and database first and get the million logical reads down to the reads that are actually necessary, your application will be optimized.

Then adjust CPU, memory and disk resources. Buy more if necessary, but upgrade the correct resources. If your CPU is only 30% consumed now, buying more isn't going to help.

How do we make our SQL perform less I/O?

You can start by eliminating repeated full or partial table scans. If the IBM database monitor recommends the same index 3,000 times an hour, you ought to consider building it as a permanent index or logical file. There are many other inefficiencies within an SQL-based application, though. Opening and closing a database file for every INSERT within a long-running batch process can cause significant overhead, so reusing open data paths is critical to the performance of your SQL applications. Making sure access plans are reused and not constantly rebuilt is equally important. Using static SQL rather than dynamic SQL can help. Avoiding dynamic sorts and access path rebuilds will significantly reduce I/O as well. If excessive SQL timeouts are occurring, you might need some operating system PTFs or adjustments in the QAQQINI options file.
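
The effect of a missing index can be sketched in a few lines. The example below is only an illustration, and it makes several assumptions: it uses SQLite (through Python's standard `sqlite3` module) as a stand-in for the real database, and the `orders` table, its columns and the `orders_by_customer` index are hypothetical names. It asks the optimizer for its plan before and after the index is built.

```python
import sqlite3

# SQLite stands in for the real database; all table and index names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, float(i)) for i in range(10000)],
)


def plan_for(sql, params=()):
    """Return the optimizer's plan for a statement as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the plan text


query = "SELECT SUM(amount) FROM orders WHERE customer_id = ?"

# Without an index on customer_id, the whole table has to be scanned.
plan_before = plan_for(query, (42,))

# Build the index the selection criteria call for, then ask again.
conn.execute("CREATE INDEX orders_by_customer ON orders (customer_id)")
plan_after = plan_for(query, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

The first plan reports a scan of the table and the second reports a search through the new index. The same reasoning applies when a database monitor keeps advising the same index: the statement is being answered by a scan every time it runs.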

Our journal receivers are on a 2nd ASP - what more can we do to improve performance of journaling?

A detailed analysis of the journal receiver transactions in security audit and transaction journals often reveals the same result: 80% of the transactions are generated by 20% of the objects. In practice, 80% or more of the transactions often come from 10 or fewer objects. Most environments that journal for security auditing purposes or for high availability needs require operating system level journaling for every object on the system. You will likely discover, though, that 2 million adopted authority changes per day is excessive and unnecessary. Either your application needs to stop changing the authority on these objects, or you need to use the CHGOBJAUD command to stop the security auditing on selected objects. Journaling of temporary IFS folders, reporting work files, user spaces, data areas and data queues can have an equal impact on system I/O performance.
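
The kind of analysis described above can be sketched as a simple count of journal entries per object. This is a minimal illustration, not a description of how any particular tool works: the function name `top_contributors`, the object names and the sample counts are all made up, and on a real system the input would come from journal entries exported to a file.

```python
from collections import Counter


def top_contributors(entries, share=0.80):
    """Return the busiest objects that together cover `share` of all entries."""
    counts = Counter(entries)
    total = sum(counts.values())
    covered, busiest = 0, []
    for obj, n in counts.most_common():  # busiest object first
        if covered >= share * total:
            break
        busiest.append((obj, n))
        covered += n
    return busiest


# Hypothetical sample: one object name per journal entry.
sample = (
    ["APPLIB/WORKF1"] * 700
    + ["APPLIB/DTAQ1"] * 150
    + ["APPLIB/CUSTMAST"] * 100
    + ["APPLIB/ORDERS"] * 50
)

for obj, n in top_contributors(sample):
    print(f"{obj}: {n} entries")
```

In this made-up sample, two of the four objects account for 85% of the entries. Those are the objects to review first when deciding what really needs to be journaled or audited.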

Can the I/O performance of an RPG application be improved without a complete rewrite?

Most I/O issues have to do with performing full scans of entire database files. If your RPG program reads every record in a large database file and checks each one against criteria coded in the program, it will run long and consume a lot of resources. It does not take a complete rewrite to make this program do less I/O. You just need to make sure a permanent index or logical file exists on the system, keyed by the fields in your selection criteria. Using a SETLL or CHAIN operation in the RPG code, you can position to the first record that meets your criteria, then continue to read through the data until the criteria are no longer met. Even where the selection criteria are variable and less predictable, there is usually a different and more efficient data access method. Again, less I/O means less disk activity, less memory, less CPU and a more efficient process.
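
The difference between the two access methods can be sketched outside of RPG. The Python below is an analogy only: a sorted list stands in for a keyed logical file, `bisect_left` plays the role of positioning with SETLL, and the loop that stops when the key changes mimics reading until the criteria are no longer met. The record layout and function names are hypothetical.

```python
from bisect import bisect_left

# Hypothetical file: 500 customers with 100 orders each, held in key order
# the way a keyed logical file would present them.
records = [(cust, order) for cust in range(500) for order in range(100)]


def full_scan(records, cust):
    """Read every record and test the criteria in the program."""
    reads, matches = 0, []
    for rec in records:
        reads += 1
        if rec[0] == cust:
            matches.append(rec)
    return matches, reads


def keyed_read(records, cust):
    """Position to the first matching key, then read until the key changes."""
    pos = bisect_left(records, (cust,))  # positioning step, like SETLL
    reads, matches = 0, []
    while pos < len(records):
        reads += 1
        if records[pos][0] != cust:      # key no longer matches: stop reading
            break
        matches.append(records[pos])
        pos += 1
    return matches, reads


scan_matches, scan_reads = full_scan(records, 42)
keyed_matches, keyed_reads = keyed_read(records, 42)
print(scan_reads, keyed_reads)
```

Both functions return the same 100 records, but the full scan reads all 50,000 records while the keyed version reads 101 (the matches plus the one record that ends the loop). The count ignores the cost of the positioning step itself, which is small because it walks the index, not the data.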

The Workload Performance Series software consists of several tools that can help with the identification and resolution of I/O bottlenecks on your systems.

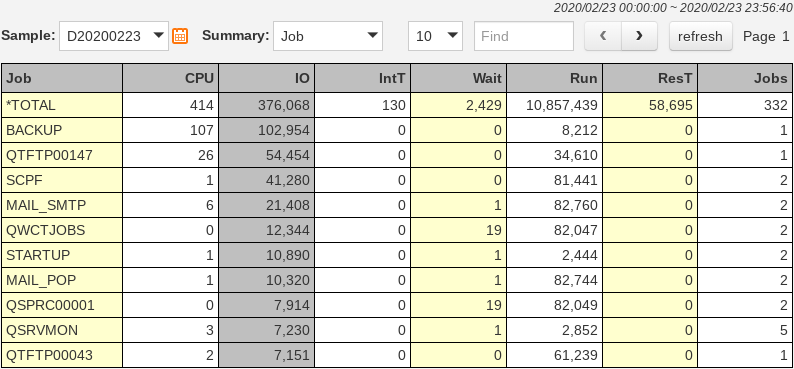

The System Navigator tool can be used to monitor CPU, memory and disk resources to pinpoint problem areas. You can easily identify the resources under constraint and the culprit jobs or users. The Workload Navigator can drill down into the problem jobs, tracking and analyzing completion history for all jobs on the system.

Once problem areas have been identified with these high-level tools, you can use our Query Optimizer tool to quickly find inefficient SQL and query activity on the system. Finding that one missing index responsible for 25% of the physical I/O on the system has never been easier.

The Journal Optimizer should be used in any environment that uses operating system level journaling extensively.

For environments that have application source code, along with the ability and willingness to change it to improve performance, the Application Optimizer can be a great tool for helping your software developers find and fix underlying code issues.

The Disk Navigator tool can be used to globally analyze all physical and logical I/O on your system - tracking statistics for all database files. The Spool Navigator should be used on occasion to analyze spooled file generation on your system - keeping joblogs and batch reports under control.

Regardless of the nature of the I/O issues in your environment, if you focus on removing the underlying I/O bottlenecks, you can dramatically improve the responsiveness of your entire system. A system is only as fast as its weakest link. CPU and memory resources will never be used to capacity on an I/O-bound system. Freeing up I/O bottlenecks allows you to fully utilize your hardware investment, resulting in maximum efficiency and optimum performance.

We invite you to visit our web site at http://www.mb-software.com, take us up on our Free 30 Day Trial offer, or just make use of our extensive online Resource Center. White Papers and Webcasts are available that provide educational value to managers, administrators and software developers.